Maeda Lab: 2009–2010

Dept. of Systems Design, Div. of Systems Research, Faculty of Engineering

/Dept. of Mechanical Engineering, Div. of Systems Integration, Graduate School of Engineering

/Div. of Mechanical Engineering and Materials Science, School of Engineering,

Yokohama National University

Bldg. of Div. of Mechanical Engineering and Materials Science #1,

79-5 Tokiwadai, Hodogaya-ku, Yokohama, 240-8501 JAPAN

Tel/Fax +81-45-339-3918 (Dr. Maeda)/+81-45-339-3894 (Lab)

E-mail webmaster[at]iir.me.ynu.ac.jp

http://www.iir.me.ynu.ac.jp/

People (2009–2010 Academic Year)

- Dr. Yusuke MAEDA (Associate Professor, Dept. of Systems Design, Div. of Systems Research, Fac. of Engineering)

- Ph.D. Student (Dept. of Mechanical Engineering, Div. of Systems Integration, Graduate School of Engineering)

- Master’s Students (Dept. of Mechanical Engineering, Div. of Systems Integration, Graduate School of Engineering)
  - Yoshinobu GOTO
  - Yuuichi MOTOKI
  - Ryo YOKOI
  - Takuya OGAWA
  - Kensuke OKITA
  - Naoki KODERA
  - Takumi WATANABE

- Undergraduate Students (Div. of Mechanical Engineering and Materials Science, School of Engineering)
  - Masahiro UTSUMI
  - Asumi ONO
  - Yuki MORIYAMA

Analysis and Planning of Robotic Manipulation

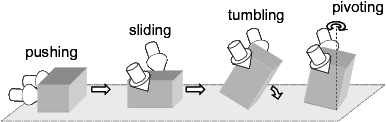

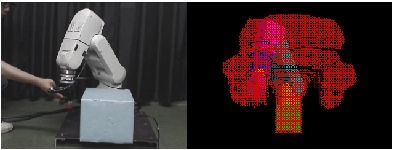

Manipulation is one of the most fundamental topics in robotics. We study the contact mechanics of graspless manipulation (manipulation without grasping, Fig. 1) and of power grasps (grasping with not only the fingertips but also other finger surfaces and the palm) [1]. Based on the contact mechanics, we have analyzed the robustness [2] and internal forces [3] of robotic manipulation. We are also developing methods for automatically generating robot motions for dexterous manipulation [4] (Fig. 2).
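Contact-mechanics analyses like these rest on a contact model, commonly Coulomb friction. As a minimal sketch of that building block only (not the robustness test of [2] or the force analyses of [1] and [3]), the function below checks whether a contact force lies inside the friction cone defined by a contact normal and a friction coefficient μ. The function name and argument conventions are illustrative assumptions.

```python
import math

def in_friction_cone(force, normal, mu, tol=1e-9):
    """Return True if a 3D contact force satisfies Coulomb's friction law.

    force, normal: 3-element sequences; normal points into the manipulated
    object (illustrative convention). mu: static friction coefficient (>= 0).
    """
    # Normalize the contact normal so the decomposition below is valid.
    n_len = math.sqrt(sum(c * c for c in normal))
    n = [c / n_len for c in normal]
    # Normal component; a contact can push (positive) but not pull.
    f_n = sum(f * c for f, c in zip(force, n))
    if f_n < -tol:
        return False
    # Tangential component = force minus its projection onto the normal.
    f_t = [f - f_n * c for f, c in zip(force, n)]
    f_t_norm = math.sqrt(sum(c * c for c in f_t))
    # Inside the cone when the tangential part is bounded by mu * normal part.
    return f_t_norm <= mu * f_n + tol
```

For example, with μ = 0.5 a force with normal component 1 and tangential component 0.4 is admissible, whereas a tangential component of 0.6 would make the contact slip.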

References

[1] Y. MAEDA, K. ODA and S. MAKITA: Analysis of Indeterminate Contact Forces in Robotic Grasping and Contact Tasks, Proc. of 2007 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2007), pp. 1570–1575, 2007.

[2] Y. MAEDA and S. MAKITA: A Quantitative Test for the Robustness of Graspless Manipulation, Proc. of 2006 IEEE Int. Conf. on Robotics and Automation (ICRA 2006), pp. 1743–1748, 2006.

[3] Y. MAEDA: On the Possibility of Excessive Internal Forces on Manipulated Objects in Robotic Contact Tasks, Proc. of 2005 IEEE Int. Conf. on Robotics and Automation (ICRA 2005), pp. 1953–1958, 2005.

[4] Y. MAEDA and T. ARAI: Planning of Graspless Manipulation by a Multifingered Robot Hand, Advanced Robotics, Vol. 19, No. 5, pp. 501–521, 2005.

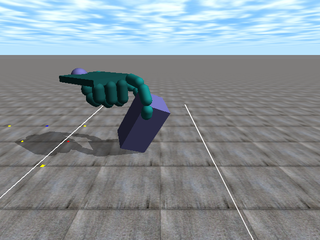

Caging Manipulation

Caging is a method of constraining objects geometrically so that they cannot escape from a “cage” formed by robot bodies. We study robotic manipulation with caging, or “caging manipulation.”

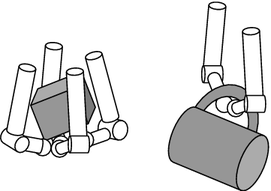

3D multifingered caging

While most related studies deal with planar caging, we study three-dimensional caging by multifingered robot hands (Figs. 3 and 4). Caging does not require force control; it is therefore well suited to current robotic devices and broadens the range of options for robotic manipulation. We are investigating sufficient conditions for 3D multifingered caging and developing an algorithm that plans finger configurations based on these conditions [1].
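To illustrate what a sufficient caging condition looks like, here is a minimal sketch of a much simpler planar analogue, not the 3D formulation of [1]. It assumes a disc of radius r and point fingers, and declares the disc caged if (a) no finger overlaps the disc, (b) the fingers surround its center with angular gaps below π, and (c) every pair of angularly adjacent fingers is closer than the diameter 2r, so the disc cannot slip between them. The conditions and function name are illustrative assumptions.

```python
import math

def disc_is_caged(center, radius, fingers):
    """Sufficient (not necessary) planar caging test for a disc and point fingers.

    Returns True only if all three conditions hold; False means the test is
    inconclusive, not that the disc can necessarily escape.
    """
    cx, cy = center
    if radius <= 0 or len(fingers) < 3:
        return False
    # (a) No finger may penetrate the disc (touching is allowed).
    if any(math.hypot(x - cx, y - cy) < radius for x, y in fingers):
        return False
    # Order fingers by angle around the disc center (a star-shaped polygon).
    pts = sorted(fingers, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))
    angles = [math.atan2(y - cy, x - cx) for x, y in pts]
    n = len(pts)
    for i in range(n):
        j = (i + 1) % n
        # Angular gap to the next finger; the last gap wraps around 2*pi.
        gap = angles[j] - angles[i] if j else 2 * math.pi - (angles[-1] - angles[0])
        # (b) Every gap below pi keeps the center enclosed by the polygon.
        if gap >= math.pi:
            return False
        # (c) Adjacent fingers closer than the diameter leave no escape gap.
        if math.dist(pts[i], pts[j]) >= 2 * radius:
            return False
    return True
```

Condition (c) works because every point on a segment shorter than 2r lies within r of one of its endpoints, so the disc's center can never cross the polygon boundary.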

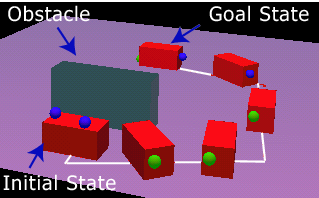

Caging manipulation with environment

Previous studies on robotic caging considered cages constructed only from robot bodies. However, not only robot bodies but also the environment, such as walls, can be used to achieve caging (Fig. 5). This helps achieve caging with fewer robots and in narrow spaces. Caging manipulation with the environment, however, poses a difficulty. In conventional caging, the object can be manipulated simply by translating the robots, but this does not hold when part of the cage is formed by the environment, which cannot move with the robots. We are therefore studying the manipulability of, and a planning algorithm for, caging manipulation with the environment [2].

References

[1] S. MAKITA and Y. MAEDA: 3D Multifingered Caging: Basic Formulation and Planning, Proc. of 2008 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2008), pp. 2697–2702, 2008.

[2] T. KOBAYASHI and Y. MAEDA: Planning of Caging Manipulation Using the Environment, Proc. of Mechanical Engineering Congress 2008 Japan, Vol. 5, pp. 191–192, 2008 (in Japanese).

Teaching Industrial Robots

Current industrial robots cannot execute tasks without teaching. Human operators must teach robots detailed motions, for example by conventional teaching playback. However, robot teaching is complicated and time-consuming for novice operators, and the cost of training them is often unaffordable for small companies. We are therefore studying easy robot programming methods to promote the wider use of robots.

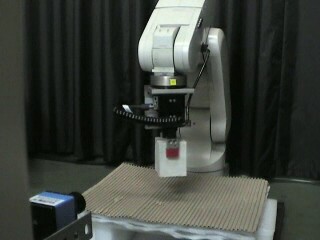

Robot programming with manual volume sweeping

We developed a robot programming method for part handling [1]. In this method, a human operator manually operates a robot manipulator so that its body sweeps a volume (Fig. 6). The swept volume represents (part of) the manipulator’s free space, because the manipulator has passed through it without collisions. A motion planner then uses the swept volume to generate a well-optimized path of the manipulator automatically.
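The idea of using the swept volume as known free space can be illustrated with a deliberately simplified sketch. It converts points sampled on the robot body during sweeping into voxels and runs a breadth-first search through those voxels, treating the robot as a single point. This simplification is an assumption for illustration only: the method of [1] plans paths for the whole manipulator, and the voxel size, sampling, and function names here are hypothetical.

```python
from collections import deque

def swept_voxels(sample_points, voxel_size):
    """Map 3D points sampled on the robot body during sweeping to voxel indices."""
    return {tuple(int(c // voxel_size) for c in p) for p in sample_points}

def plan_in_swept_volume(free, start, goal):
    """Breadth-first search over 6-connected free voxels; returns a shortest path."""
    if start not in free or goal not in free:
        return None
    prev = {start: None}          # predecessor map, also serves as visited set
    queue = deque([start])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        v = queue.popleft()
        if v == goal:
            # Walk the predecessor chain back to the start.
            path = []
            while v is not None:
                path.append(v)
                v = prev[v]
            return path[::-1]
        for dx, dy, dz in steps:
            w = (v[0] + dx, v[1] + dy, v[2] + dz)
            # Only voxels the operator has swept are considered collision-free.
            if w in free and w not in prev:
                prev[w] = v
                queue.append(w)
    return None
```

Because only swept voxels count as free, any path returned stays inside space the operator has already shown to be collision-free.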

Robot programming by human demonstration

If robots can be programmed simply by demonstrating tasks to them, robot teaching becomes intuitive even for novice operators. We are developing several techniques for programming by demonstration, including one using random pattern projection (Fig. 7, [2]) and one using a view-based approach [3].

References

[1] Y. MAEDA, T. USHIODA and S. MAKITA: Easy Robot Programming for Industrial Manipulators by Manual Volume Sweeping, Proc. of 2008 IEEE Int. Conf. on Robotics and Automation (ICRA 2008), pp. 2234–2239, 2008.

[2] Y. GOTO, T. SHIMADA and Y. MAEDA: Realization of Robot Teaching by Human Demonstration with Random Pattern Projection, Proc. of 2008 JSME Conf. on Robotics and Mechatronics (Robomec 2008), 1A1–G14, 2008 (in Japanese).

[3] T. NAKAMURA and Y. MAEDA: View-Based Graspless Manipulation Using Virtual Environment, Proc. of 50th Japan Joint Automatic Control Conf., pp. 617–622, 2007 (in Japanese).

Handling of Various Objects by Robots

Techniques for robotic manipulation of a variety of objects are under investigation.

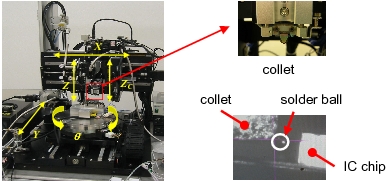

Handling of submillimeter-sized objects

We study the handling of micro solder balls 100 µm or smaller in diameter for joining electronic components. In cooperation with AJI Co., Ltd., we have developed a method for reliably picking up solder balls with a robot equipped with a vacuum collet (Fig. 8, [1], [2]). We have also developed a simple force-control mechanism for the robot [3].

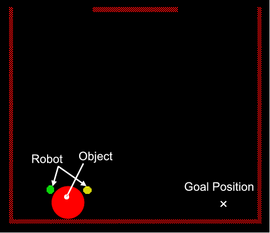

Object handling with multi-modal sensing for service robots

It is still difficult for current robots to recognize the variety of objects found in homes and offices and to perform appropriate tasks on their own. The structured environment approach, in which the information robots need to handle objects is embedded in the environment, is therefore a practical and promising solution. We also adopt this approach, using two-dimensional codes (QR codes) for information embedding and object localization, and laser displacement sensors for finer localization (Fig. 9, [4]).
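As an illustration of code-based localization, the sketch below computes a planar pose (position and orientation) from the four corners of a square 2D code. It assumes a hypothetical upstream detector has already found the corners and mapped them into the robot's planar frame in a fixed order; the refinement with laser displacement sensors is not modeled, and the function name is illustrative.

```python
import math

def code_pose_2d(corners):
    """Estimate the planar pose (x, y, theta) of a square 2D code.

    corners: four (x, y) points ordered top-left, top-right, bottom-right,
    bottom-left, already expressed in the robot's planar frame (assumed).
    """
    if len(corners) != 4:
        raise ValueError("expected four corners")
    # Position: centroid of the four corners.
    x = sum(p[0] for p in corners) / 4.0
    y = sum(p[1] for p in corners) / 4.0
    # Orientation: average direction of the top and bottom edges,
    # which reduces the effect of noise on any single edge.
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = corners
    dx = (x1 - x0) + (x2 - x3)
    dy = (y1 - y0) + (y2 - y3)
    return x, y, math.atan2(dy, dx)
```

An axis-aligned code yields θ = 0, and the same code rotated 90° counterclockwise yields θ = π/2.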

References

[1] T. KOBAYASHI, Y. MAEDA, S. MAKITA, S. MIURA, I. KUNIOKA and K. YOSHIDA: Manipulation of Micro Solder Balls for Joining Electronic Components, Proc. of 2006 Int. Symp. on Flexible Automation, pp. 408–411, 2006.

[2] A. MATSUMOTO, K. YOSHIDA and Y. MAEDA: Design of a Desktop Microassembly Machine and Its Industrial Application to Micro Solder Ball Manipulation, M. Gauthier and S. Régnier eds., Robotic Microassembly, Wiley, pp. 273–290, 2009 (to appear).

[3] S. MAKITA, Y. MAEDA, Y. KADONO, S. MIURA, I. KUNIOKA and K. YOSHIDA: Manipulation of Submillimeter-sized Electronic Parts Using Force Control and Vision-based Position Control, Proc. of 2007 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2007), pp. 1834–1839, 2007.

[4] R. YOKOI and Y. MAEDA: Construction of a Robotic Handling System with Two-Dimensional Codes and a Laser Displacement Sensor, Proc. of Mechanical Engineering Congress 2008 Japan, Vol. 5, pp. 233–234, 2008 (in Japanese).

Analysis of Human Hands and Their Dexterity

The theory of robotic manipulation can be applied to the analysis of human hands and their dexterity. Understanding human dexterity is very important for implementing high dexterity in robots. We are conducting several studies on modeling human hands and skills.

Mechanical modeling of human fingertip forces

Jointly with the Digital Human Research Center, AIST, we measure and model active human fingertip forces in various postures (Fig. 10, [1]). A mechanical model of human fingertip forces can contribute to the development of human-friendly products. We also use this model to estimate human fingertip forces from EMG signals [2].
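Mechanical finger models of this kind rely on the static relation between joint torques and fingertip force, τ = J^T f, where J is the fingertip Jacobian. A minimal sketch for a planar three-joint finger follows (not the model of [1]); it recovers f from given joint torques by least squares. The planar simplification, link lengths, and joint angles are hypothetical, and the step that derives joint torques from EMG signals through a musculoskeletal model [2] is assumed to happen upstream.

```python
import numpy as np

def planar_finger_jacobian(q, lengths):
    """2 x n Jacobian of the fingertip position of a planar serial finger."""
    q = np.asarray(q, dtype=float)
    lengths = np.asarray(lengths, dtype=float)
    phi = np.cumsum(q)                      # absolute angle of each link
    J = np.zeros((2, len(q)))
    for j in range(len(q)):
        # Joint j moves every link from j to the fingertip.
        J[0, j] = -np.sum(lengths[j:] * np.sin(phi[j:]))
        J[1, j] = np.sum(lengths[j:] * np.cos(phi[j:]))
    return J

def fingertip_force_from_torques(q, lengths, tau):
    """Least-squares fingertip force f satisfying tau = J^T f (static equilibrium)."""
    J = planar_finger_jacobian(q, lengths)
    f, *_ = np.linalg.lstsq(J.T, np.asarray(tau, dtype=float), rcond=None)
    return f
```

With three joints and a 2D force, J^T is 3 × 2, so least squares returns the force that best explains the (possibly noisy) torque estimates.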

Modeling of human dexterous manipulation

We are investigating human skills in dexterous manipulation. We measure and model human graspless manipulation (Fig. 11, [3], [4]) to understand the intelligence in mobility, or “mobiligence.”
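Reference [3] models human pivoting with hidden Markov models. As a generic illustration only (the states, symbols, and probabilities are hypothetical, not those of [3]), the forward algorithm below computes the likelihood of an observed symbol sequence under a discrete HMM, which is the usual way to score how well a model explains a measured motion.

```python
import numpy as np

def hmm_likelihood(pi, A, B, obs):
    """Forward algorithm: P(obs | HMM) for a discrete-observation HMM.

    pi: (N,) initial state probabilities.
    A:  (N, N) transition matrix, A[i, j] = P(next state j | state i).
    B:  (N, M) emission matrix, B[i, k] = P(symbol k | state i).
    obs: sequence of observed symbol indices.
    """
    pi, A, B = np.asarray(pi, float), np.asarray(A, float), np.asarray(B, float)
    # alpha[i] = P(observations so far, current state = i)
    alpha = pi * B[:, obs[0]]
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]
    return float(alpha.sum())
```

For long sequences, a practical version would rescale alpha at each step (or work in log space) to avoid numerical underflow.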

References

[1] N. MIYATA, K. YAMAGUCHI and Y. MAEDA: Measuring and Modeling Active Maximum Fingertip Forces of a Human Index Finger, Proc. of 2007 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2007), pp. 2156–2161, 2007.

[2] T. OGAWA, Y. MAEDA, K. FUKUI and N. MIYATA: Estimation of Human Fingertip Forces from EMG Signals Using a Musculoskeletal Model of Fingers, Proc. of 2009 Spring Meeting of Japan Soc. for Precision Engineering, pp. 57–58, 2009 (in Japanese).

[3] Y. MAEDA and T. USHIODA: Hidden Markov Modeling of Human Pivoting, J. of Robotics and Mechatronics, Vol. 19, No. 4, pp. 444–447, 2007.

[4] T. USHIODA and Y. MAEDA: A Model of Human Pivoting Manipulation Based on CPG, Proc. of SICE Symp. on Systems and Information 2007, pp. 69–72, 2007 (in Japanese).

Reconfigurable Assembly Systems

In recent years, reconfigurability of manufacturing systems has been in great demand. Changes of system

configuration at low cost will help the systems cope with both predictable and unpredictable fluctuations

in production environment. For example, a manufacturing system can adapt to the increase of production

quantity if an additional device can be easily plugged into the system. For such easy reconfiguration,

“Plug & Produce” concept was proposed in IMS/HMS (Holonic Manufacturing Systems) project and a

holonic assembly system with Plug & Produce functionality was developed at Arai Lab, The University

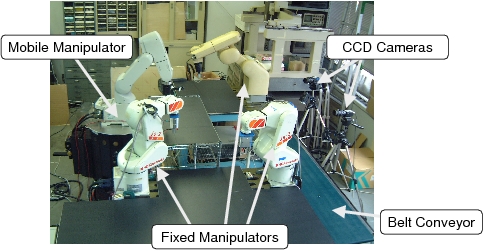

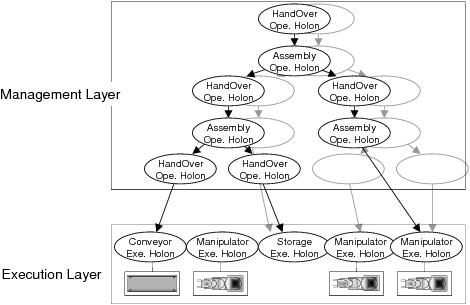

of Tokyo (Fig. 12, [1]). The holonic assembly system has a multi-agent architecture that enables easysystem reconfiguration (Fig. 13).

We are developing a reinforcement-learning-based method for online task assignment in the holonic assembly system to achieve both high reconfigurability and high productivity [2].
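The formulation of [2] is not given here, so the following is only a generic sketch of how reinforcement learning can drive online task assignment: a tabular Q-learning agent picks which robot receives the next task. The state encoding (for example, discretized robot queue lengths), the reward, and the class name are hypothetical assumptions.

```python
import random
from collections import defaultdict

class TaskAssigner:
    """Tabular Q-learning agent choosing which robot receives the next task."""

    def __init__(self, n_robots, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.n_robots = n_robots
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q = defaultdict(float)          # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def choose(self, state):
        # Epsilon-greedy: explore occasionally, otherwise pick the best-valued robot.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_robots)
        return max(range(self.n_robots), key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        # One-step Q-learning update toward r + gamma * max_a' Q(s', a').
        best_next = max(self.q[(next_state, a)] for a in range(self.n_robots))
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
```

Because the table is keyed by state, a newly plugged-in robot can be supported by enlarging the action set and letting the values adapt online, which is one way such learning can coexist with reconfiguration.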

References

[1] Y. MAEDA, H. KIKUCHI, H. IZAWA, H. OGAWA, M. SUGI and T. ARAI: “Plug & Produce” Functions for an Easily Reconfigurable Robotic Assembly Cell, Assembly Automation, Vol. 27, No. 3, pp. 253–260, 2007.

[2] Y. MAEDA and N. SAKAMOTO: Online Task Assignment Based on Reinforcement Learning for a Multi-Agent Robotic Assembly System, Proc. of Mechanical Engineering Congress 2007 Japan, Vol. 7, pp. 263–264, 2007 (in Japanese).